Convolution reverb sampled from real rooms is considered the gold standard for realistic reverb. But it isn't perfect. So I've decided to use Wave-Based simulations, the crème de la crème of virtual acoustics, to solve the issues and overcome the limitations that come from sampling reverb in the real world.

A plugin is planned, but custom IR sets can be delivered right now. If you're a virtual instrument developer, check out my Henka Kernels.

Here's how it sounds:

Grand Piano

DRY

REVERB

French Horns

DRY

REVERB

THE CONCEPT

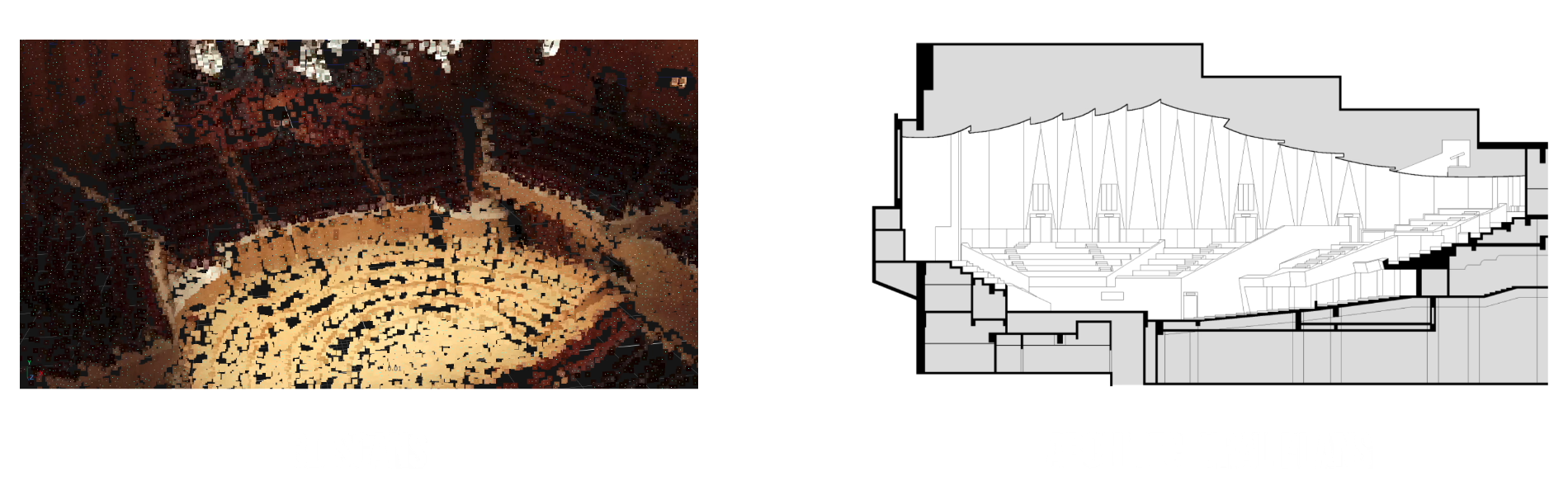

It starts with a detailed 3D model, faithful to the space whose reverb we want to model. The dimensions can be determined from 3D scans, architectural plans, or a combination of both.

Then, a 3D model specifically optimized for Wave-Based simulation is created, with each surface assigned the correct acoustical properties. Wave-Based simulations solve the wave equation directly. This makes them very accurate, but it requires a tremendous amount of computational power, far too much to run in real time. So after the source and the microphone are placed in the virtual space, an Impulse Response (IR) is rendered offline. That IR can then be loaded into a convolution reverb in your DAW and applied in real time.
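To illustrate the "solve the wave equation, record an IR, convolve" pipeline, here is a deliberately tiny sketch. It makes several assumptions and is not the solver behind these renders. It is a 1D finite-difference time-domain (FDTD) leapfrog scheme between two rigid walls. A single frequency-independent loss term stands in for surface absorption, and the function names are hypothetical. The pressure recorded at the microphone node is the IR, and applying the reverb is then a plain convolution.

```python
import numpy as np


def render_ir_fdtd_1d(length_m=10.0, src_pos=2.0, mic_pos=7.0,
                      fs=48000, duration_s=0.5, c=343.0, decay_per_s=5.0):
    """Toy 1D FDTD render of an impulse response between two rigid walls.

    Solves u_tt + 2*decay*u_t = c^2 * u_xx with a leapfrog scheme and records
    the pressure at the microphone node at every time step.
    """
    dt = 1.0 / fs
    dx = c * dt                       # Courant number c*dt/dx = 1, the 1D stability limit
    lam2 = (c * dt / dx) ** 2         # squared Courant number (exactly 1 here)
    n_x = int(round(length_m / dx)) + 1
    n_t = int(round(duration_s * fs))
    src, mic = int(round(src_pos / dx)), int(round(mic_pos / dx))

    def laplacian(field):
        # Rigid walls: mirror the field across each end node (zero pressure gradient).
        padded = np.pad(field, 1, mode="reflect")
        return padded[2:] - 2.0 * field + padded[:-2]

    # Excitation: a narrow Gaussian pressure pulse at the source, released at rest.
    x = np.arange(n_x)
    u = np.exp(-0.5 * ((x - src) / 3.0) ** 2)
    # Zero initial velocity means u[-1] == u[1], which fixes the "previous" step.
    u_prev = u + 0.5 * lam2 * laplacian(u)
    a = decay_per_s * dt              # crude, frequency-independent stand-in for absorption

    ir = np.empty(n_t)
    ir[0] = u[mic]
    for n in range(1, n_t):
        u_next = (2.0 * u - (1.0 - a) * u_prev + lam2 * laplacian(u)) / (1.0 + a)
        u_prev, u = u, u_next
        ir[n] = u[mic]
    return ir


def apply_reverb(dry, ir):
    """Convolve a dry signal with the rendered IR (offline, full-length convolution)."""
    return np.convolve(dry, ir)


if __name__ == "__main__":
    ir = render_ir_fdtd_1d()
    dry = np.zeros(4800)
    dry[0] = 1.0                      # hypothetical dry signal: a single click
    wet = apply_reverb(dry, ir)
    print("direct arrival (samples):", int(np.argmax(np.abs(ir[:1000]))))
    print("IR length:", len(ir), "wet length:", len(wet))
```

In 1D at a Courant number of exactly 1, this scheme moves the pulse one cell per time step without dispersion. The direct sound therefore reaches the microphone after (mic − source) / c seconds, about 700 samples here. A real-time plugin would use partitioned FFT convolution instead of `np.convolve`, but the result is the same signal.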

The goal of this method is to overcome the physical limitations and unwanted artifacts that come from sampling reverb in the real world, while still producing a reverb that sounds realistic. To my ears, it is by far the most natural-sounding reverb I've ever used.

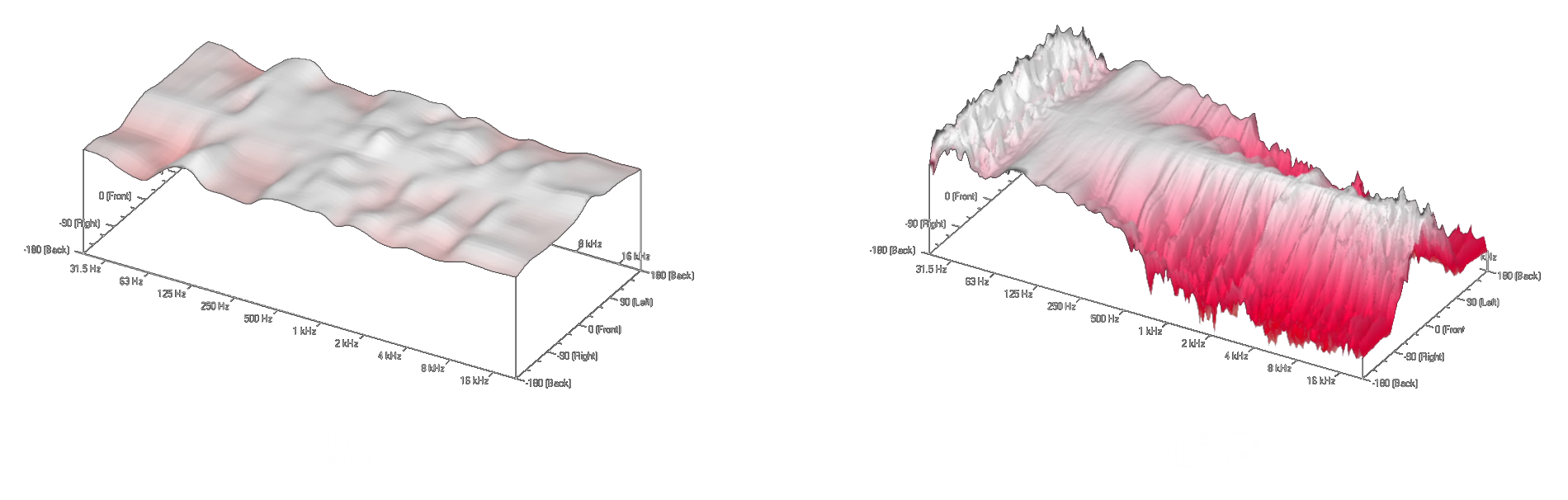

INSTRUMENT-SPECIFIC DIRECTIVITY

Above are two horizontal directivity maps. The vertical axis represents amplitude. The harp, a largely omnidirectional instrument, has a consistent amplitude regardless of angle or frequency. A speaker, however, becomes directional in the high end: its amplitude drops off-axis. This affects how the reverb sounds in the room. With a harp, all reflections in the room will be equally bright. With a speaker, reflections from the sides and the back of the room will be darker.
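The difference between the two maps can be reproduced with a textbook idealization. The code below models the speaker as a baffled circular piston, with a hypothetical 0.1 m radius (roughly an 8-inch driver). The harp is modeled as a perfectly omnidirectional source. Both models are assumptions chosen for illustration and are not measured data. The piston's far-field amplitude is 2·J1(x)/x with x = k·a·sin(θ). Here k is the wavenumber, a the radius and θ the off-axis angle. J1 is computed from Bessel's integral so the sketch needs only NumPy.

```python
import numpy as np


def bessel_j1(x, n_points=256):
    """J1 via Bessel's integral: J1(x) = (1/2pi) * integral over one period of cos(t - x*sin(t)).

    The integrand is periodic, so a plain midpoint rule converges very quickly.
    """
    x = np.asarray(x, dtype=float)
    t = (np.arange(n_points) + 0.5) * 2.0 * np.pi / n_points
    return np.mean(np.cos(t - x[..., None] * np.sin(t)), axis=-1)


def piston_directivity(freq_hz, angle_deg, radius_m=0.1, c=343.0):
    """Far-field amplitude of a baffled circular piston (idealized speaker), 1.0 on-axis."""
    k = 2.0 * np.pi * np.asarray(freq_hz, dtype=float) / c
    x = k * radius_m * np.sin(np.radians(angle_deg))
    on_axis = np.abs(x) < 1e-9
    safe_x = np.where(on_axis, 1.0, x)      # avoid 0/0; the limit of 2*J1(x)/x is 1
    return np.where(on_axis, 1.0, 2.0 * bessel_j1(safe_x) / safe_x)


def omni_directivity(freq_hz, angle_deg):
    """Idealized omnidirectional source (how the harp map behaves): flat at every angle."""
    shape = np.broadcast(np.asarray(freq_hz), np.asarray(angle_deg)).shape
    return np.ones(shape)


if __name__ == "__main__":
    # Level sent toward a side wall (90 degrees off-axis), relative to on-axis.
    for f in (125, 1000, 8000):
        side = float(piston_directivity(f, 90))
        level_db = 20 * np.log10(max(abs(side), 1e-12))
        print(f"{f:>5} Hz at 90 deg: speaker {level_db:6.1f} dB, omni 0.0 dB")
```

The speaker sends nearly full level toward the side walls at 125 Hz, but only a small fraction at 8 kHz. The side-wall reflections it excites therefore come back darker, while the omni source keeps them equally bright.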

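When reverb is sampled, the room is excited by a speaker, so the reflections inherit the speaker's directivity rather than the instrument's. Wave-Based reverb bypasses this issue by using a virtual source that can have any directivity pattern we want, including that of a musical instrument.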

PERFECT FREQUENCY RESPONSE

The frequency response of a speaker comprises both its magnitude and phase responses, that is, how loud each frequency is and how much it is shifted in time. When sampling reverb with a speaker, we usually want this frequency response to be as transparent as possible, since we want to capture the sound of the space, not the sound of the speaker. Correcting the magnitude response is possible to a somewhat acceptable degree, although it is quite difficult, and it is almost never done correctly (or at all) in convolution reverb plugins.

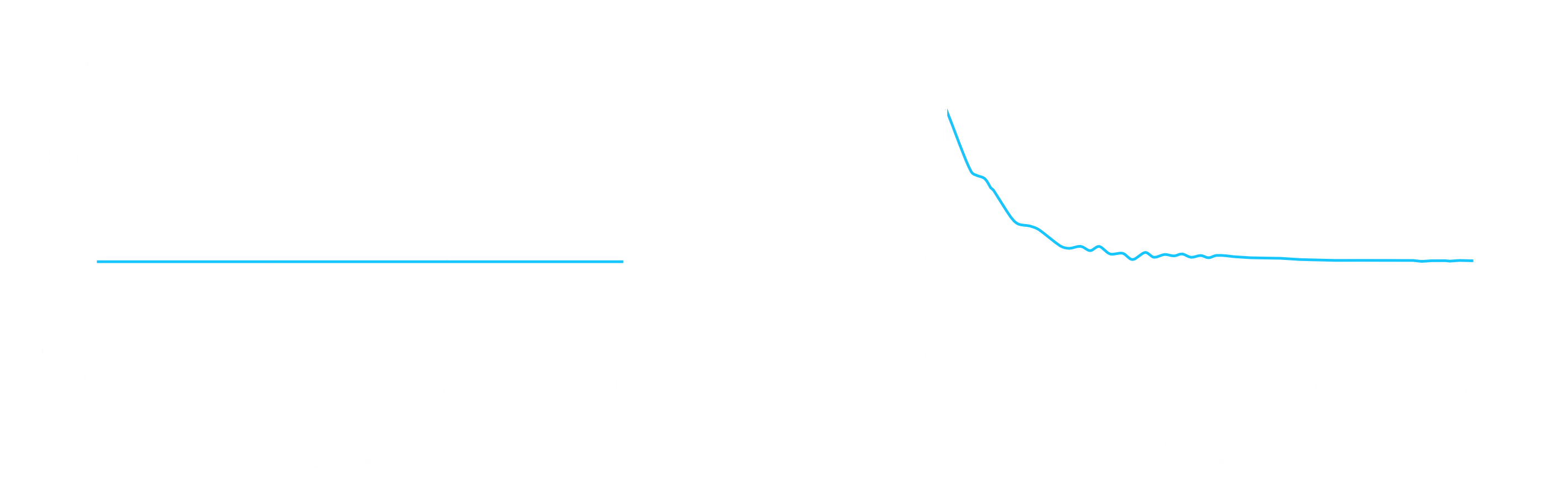

On top of that, correcting the phase response isn't really possible without introducing pre-ringing artifacts that wreck the speaker's transient response. This is because speakers are not minimum phase across the whole frequency range. The excess (non-minimum-phase) part of a response can only be inverted by a filter that starts ringing before the sound it is correcting. This can be seen in excess group delay graphs.
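The pre-ringing problem shows up even with a two-tap toy "speaker", which is an assumption for illustration and not a real loudspeaker model. The taps [1, 0.5] and [0.5, 1] have identical magnitude responses. The first is minimum phase (its zero lies inside the unit circle). The second is not (its zero lies outside). The sketch inverts each one exactly by spectral division. It then measures how much of the inverse filter's energy lands at negative time, which with FFT processing wraps around to the end of the buffer.

```python
import numpy as np


def exact_inverse(h, n_fft=256):
    """Invert an FIR filter by dividing by its spectrum (circular, unregularized)."""
    return np.real(np.fft.ifft(1.0 / np.fft.fft(h, n_fft)))


def pre_ringing_fraction(g):
    """Share of the inverse filter's energy at negative time.

    With circular (FFT) processing, negative-time samples wrap to the end of the
    buffer, so the second half of g stands in for "before the sound".
    """
    half = len(g) // 2
    return float(np.sum(g[half:] ** 2) / np.sum(g ** 2))


min_phase = np.array([1.0, 0.5])   # zero at z = -0.5: inside the unit circle
max_phase = np.array([0.5, 1.0])   # zero at z = -2: outside the unit circle (non-minimum phase)

if __name__ == "__main__":
    same_magnitude = np.allclose(np.abs(np.fft.fft(min_phase, 256)),
                                 np.abs(np.fft.fft(max_phase, 256)))
    print("identical magnitude responses:", same_magnitude)
    print("pre-ringing, minimum-phase inverse:",
          round(pre_ringing_fraction(exact_inverse(min_phase)), 3))
    print("pre-ringing, non-minimum-phase inverse:",
          round(pre_ringing_fraction(exact_inverse(max_phase)), 3))
```

The minimum-phase inverse is causal, so essentially none of its energy precedes the sound. The non-minimum-phase inverse is almost entirely anti-causal: nearly all of its energy arrives before the sound. Delaying and truncating it to make it usable is exactly what produces audible pre-ringing.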

Wave-Based reverb solves these issues by using a virtual source that can have any frequency response we want, including a completely flat, transparent one.

FIELD SEPARATION DONE RIGHT

Field separation is an important part of convolution reverb, as it allows you to control the different elements of the impulse response. The balance between the early reflections and the reverb tail controls the perceived size and depth of the reverb. Having control over the direct sound is also important, whether you want to simply add reverb or recreate a virtual room mic.

Sampled convolution reverbs can only do this separation in time. The issue is that these elements usually overlap, so it's impossible to cut them cleanly this way. This is also a challenge for Wave-Based simulations, which don't trace the path of each reflection. But I use a hybrid model that incorporates Geometrical Acoustics, which makes it possible to separate these fields without artifacts.

This gives an unprecedented amount of control and creative freedom that sampled convolution reverbs simply can't achieve.
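Here is a small synthetic example of why a time cut cannot separate overlapping fields. The IR is hypothetical and built from labeled components, the kind of per-path bookkeeping a geometrical model can provide. Its direct sound rings at 40 Hz, standing in for a low end that outlasts the attack. Its first reflection is an attenuated copy arriving 6 ms later. The 3 ms cut point and all signal parameters are arbitrary choices for illustration.

```python
import numpy as np

FS = 48000
t = np.arange(int(0.05 * FS)) / FS

# Hypothetical labeled components of one IR.
direct = np.exp(-t / 0.01) * np.sin(2 * np.pi * 40.0 * t)   # direct sound with a ringing low end
delay = int(0.006 * FS)                                      # first reflection arrives 6 ms later
reflection = np.zeros_like(direct)
reflection[delay:] = 0.6 * direct[:-delay]                   # delayed, attenuated copy
ir = direct + reflection                                     # what a sampled IR would contain


def split_in_time(ir, cut_s, fs=FS):
    """Time-based field separation: before cut_s is 'direct', the rest is 'room'."""
    cut = int(round(cut_s * fs))
    return ir[:cut], ir[cut:]


def bleed_after_cut(cut_s, fs=FS):
    """Share of the direct sound's energy that a time cut wrongly hands to the 'room' part."""
    cut = int(round(cut_s * fs))
    return float(np.sum(direct[cut:] ** 2) / np.sum(direct ** 2))


if __name__ == "__main__":
    early, room_part = split_in_time(ir, 0.003)
    cut = len(early)
    # The 'room' part still contains the tail of the direct sound.
    print("room part = reflection + direct tail:",
          np.allclose(room_part, reflection[cut:] + direct[cut:]))
    print(f"direct-sound energy after the 3 ms cut: {bleed_after_cut(0.003):.0%}")
```

With labeled components, the fields are simply `direct` and `reflection`, and nothing needs to be cut. Moving the time cut later reduces the low-frequency bleed. Once the cut passes 6 ms, though, it starts chopping the first reflection instead.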

AND MORE

- Virtual microphone emulation, from modern designs to vintage models, as well as perfectly transparent ones that can't exist in the real world. Both the magnitude response and the frequency-dependent directivity are modeled.

- Full 24-bit dynamic range (~144 dB). No quantization errors, and no early cutoff of the tail, unlike regular convolution reverbs. This leaves more room for increasing the reverb time or applying heavy compression without hearing artifacts.

- No low-frequency bleed from the direct sound into the reverb.

- No first reflections cutoff when direct sound is removed.

- Full bandwidth (1 Hz to 20 kHz). It is technically possible to go above 20 kHz so that time-stretching the IR is of higher quality. But because of the Courant-Friedrichs-Lewy (CFL) condition, render times increase drastically. Doubling the sample rate halves the grid spacing in each of the three dimensions and also halves the time step, so it means 16 times the work. A render that takes 10 hours at a 48 kHz sample rate will take 160 hours at 96 kHz.
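Two figures in this list, the ~144 dB and the 10 h to 160 h jump, come down to simple arithmetic. The sketch below checks both. For dynamic range it assumes an ideal integer format. For render time it assumes an explicit 3D scheme run at its CFL limit, where grid spacing scales with 1 / sample rate; this is an assumption about the solver, not a description of it. The function names are hypothetical.

```python
import math


def dynamic_range_db(bits):
    """Ideal dynamic range of an integer format: 20*log10(2**bits), about 6.02 dB per bit."""
    return 20.0 * math.log10(2 ** bits)


def fdtd_render_time_scale(fs_old, fs_new, dims=3):
    """Cost ratio of an explicit FDTD render when only the sample rate changes.

    At the CFL limit the grid spacing is tied to the time step, so scaling the
    sample rate by r multiplies the cell count by r**dims and the number of
    time steps (for the same IR length) by r.
    """
    ratio = fs_new / fs_old
    return ratio ** (dims + 1)


print(round(dynamic_range_db(24), 1))               # 144.5
print(10 * fdtd_render_time_scale(48000, 96000))    # 160.0 (hours, starting from 10)
```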

To order custom Wave-Based impulse responses, or if you have any questions :

acoustics@tomiwailee.com

WHY AM I NOT SELLING IR PACKS?

Because ultimately, I want to create a plugin with a specific workflow so that you can easily achieve the sound you're looking for, at a reasonable price.

So in the meantime, I'm happy to create custom IRs so that I can tailor the reverb exactly to your needs, with the room and the instrument and microphone placements of your choice. That level of personalization won't be possible in a one-size-fits-all plugin. It also means I can adapt the IRs to your particular workflow, DAW and plugins, so that the reverb can be used in the best conditions.
